Facebook’s generation of ‘Jew Hater’ and other advertising categories prompts system inspection

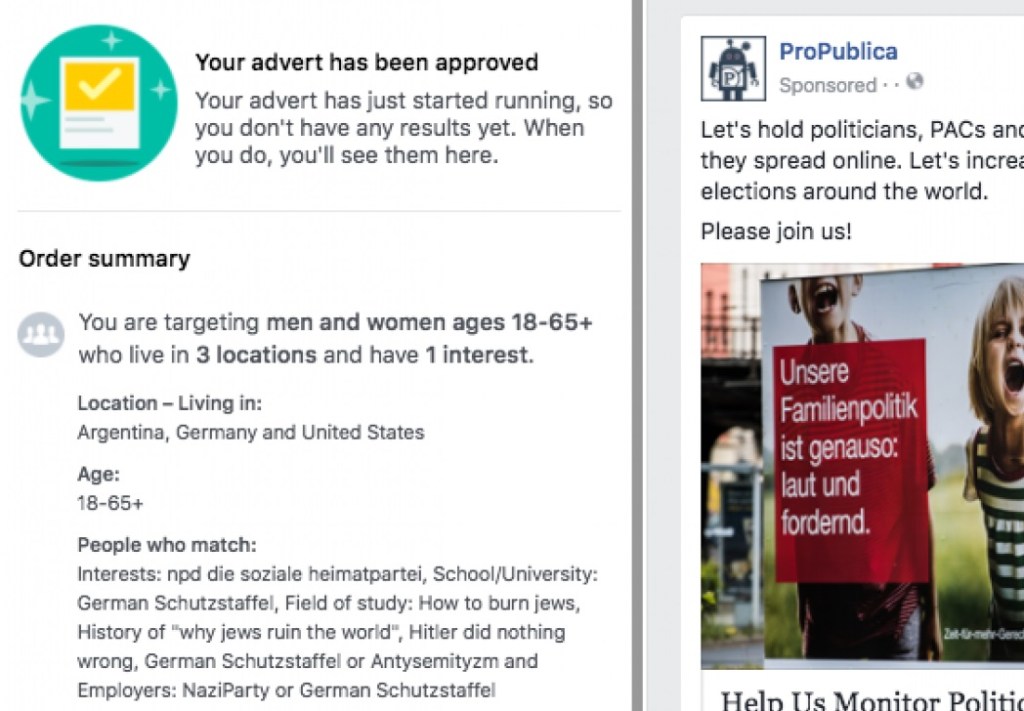

Facebook automatically generates categories advertisers can target, such as “jogger” and “activist,” based on what it observes in users’ profiles. Usually that’s not a problem, but ProPublica found that Facebook had generated anti-Semitic categories such as “Jew Hater” and “Hitler did nothing wrong,” which could be targeted for advertising purposes.

The categories were small — a few thousand people total — but the fact that they existed for official targeting (and in turn, revenue for Facebook) rather than being flagged raises questions about the effectiveness — or even existence — of hate speech controls on the platform. Although surely countless posts are flagged and removed successfully, the failures are often conspicuous.

ProPublica, acting on a tip, found that a handful of categories autocompleted when its researchers entered “jews h” into the advertising category search box. To verify these were real, the researchers bundled a few together and bought an ad targeting them, which indeed went live.

Upon being alerted, Facebook removed the categories and issued a familiar-sounding, strongly worded statement about how tough on hate speech the company is:

> We don’t allow hate speech on Facebook. Our community standards strictly prohibit attacking people based on their protected characteristics, including religion, and we prohibit advertisers from discriminating against people based on religion and other attributes. However, there are times where content is surfaced on our platform that violates our standards. In this case, we’ve removed the associated targeting fields in question. We know we have more work to do, so we’re also building new guardrails in our product and review processes to prevent other issues like this from happening in the future.

The problem occurred because people were listing “jew hater” and the like in their “field of study” category, which is of course a good one for guessing what a person might be interested in: meteorology, social sciences, etc. Although the numbers were extremely small, that shouldn’t be a barrier to an advertiser looking to reach a very limited group, like owners of a rare dog breed.

But as difficult as it might be for an algorithm to determine the difference between “History of Judaism” and “History of ‘why Jews ruin the world,’” it really does seem incumbent on Facebook to make sure an algorithm does make that determination. At the very least, when categories are potentially sensitive, dealing with personal data like religion, politics, and sexuality, one would think they would be verified by humans before being offered up to would-be advertisers.
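To make that concrete, here is a minimal sketch of what such a gate could look like. It is purely illustrative and not a description of Facebook's actual systems: the word lists, the `ReviewQueues` class, and the `triage` function are all hypothetical, and a real system would need far more than keyword matching. The idea is simply that anything touching a sensitive topic gets held for a human, and anything pairing a sensitive topic with hostile language never becomes targetable at all.

```python
import re
from dataclasses import dataclass, field

# Hypothetical keyword lists, invented for this sketch only.
# Words hinting that a category touches religion, politics, or sexuality.
SENSITIVE_TOPICS = {"jew", "jews", "judaism", "muslim", "muslims",
                    "christian", "gay", "democrat", "republican"}
# Words hinting at hostility toward a group.
HOSTILE_TERMS = {"hater", "haters", "hate", "ruin", "kill"}


@dataclass
class ReviewQueues:
    """Buckets a user-entered category can land in before advertisers see it."""
    approved: list = field(default_factory=list)
    needs_human_review: list = field(default_factory=list)
    blocked: list = field(default_factory=list)


def tokenize(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def triage(category, queues):
    """Route a candidate category and return the name of the queue it went to."""
    words = tokenize(category)
    sensitive = bool(words & SENSITIVE_TOPICS)
    hostile = bool(words & HOSTILE_TERMS)

    if sensitive and hostile:
        # Sensitive topic plus hostile wording: never offer it for targeting.
        queues.blocked.append(category)
        return "blocked"
    if sensitive or hostile:
        # Ambiguous: a person decides before it becomes targetable.
        queues.needs_human_review.append(category)
        return "needs_human_review"
    queues.approved.append(category)
    return "approved"


queues = ReviewQueues()
for candidate in ["Meteorology", "History of Judaism", "Jew Hater"]:
    print(candidate, "->", triage(candidate, queues))
```

Under these made-up lists, “History of Judaism” is held for a person to approve rather than rejected outright, which is the point: the algorithm does not have to tell the two apart perfectly, it only has to know when to ask.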

Facebook told TechCrunch that it is now working to prevent such offensive entries in demographic traits from appearing as addressable categories. Of course, hindsight is 20/20, but really, it’s only doing this now?

It’s good that measures are being taken, but it’s kind of hard to believe that there was not some kind of flag list that watched for categories or groups that clearly violate the no-hate-speech provision. I asked Facebook for more details on this, and will update the post if I hear back.

Update: As Harvard’s Joshua Benton points out on Twitter, one can also target the same groups with Google AdWords:

I feel like this is different somehow, although still troubling. You could put nonsense words into those keyword boxes and they would be accepted. On the other hand, Google does suggest related anti-Semitic phrases in case you felt like “Jew haters” wasn’t broad enough:

To me the Facebook mechanism seems more like a selection by Facebook of existing, quasi-approved (i.e. hasn’t been flagged) profile data it thinks fits what you’re looking for, while Google’s is a more senseless association of queries it’s had — and it has less leeway to remove things, since it can’t very well not allow people to search for ethnic slurs or the like. But obviously it’s not that simple. I honestly am not quite sure what to think.