Is Facebook’s Anti-Abuse System Broken?

Facebook has built some of the most advanced algorithms for tracking users, but when it comes to acting on user abuse reports about Facebook groups and content that clearly violate the company’s “community standards,” the social media giant’s technology appears to be woefully inadequate.

Last week, Facebook deleted almost 120 groups totaling more than 300,000 members. The groups were mostly closed — requiring approval from group administrators before outsiders could view the day-to-day postings of group members.

However, the titles, images and postings available on each group’s front page left little doubt about their true purpose: Selling everything from stolen credit cards, identities and hacked accounts to services that help automate things like spamming, phishing and denial-of-service attacks for hire.

To its credit, Facebook deleted the groups within just a few hours of KrebsOnSecurity sharing via email a spreadsheet detailing each group, which concluded that the average length of time the groups had been active on Facebook was two years. But I suspect that the company took this extraordinary step mainly because I informed them that I intended to write about the proliferation of cybercrime-based groups on Facebook.

That story, Deleted Facebook Cybercrime Groups had 300,000 Members, ended with a statement from Facebook promising to crack down on such activity and instructing users on how to report groups that violate its community standards.

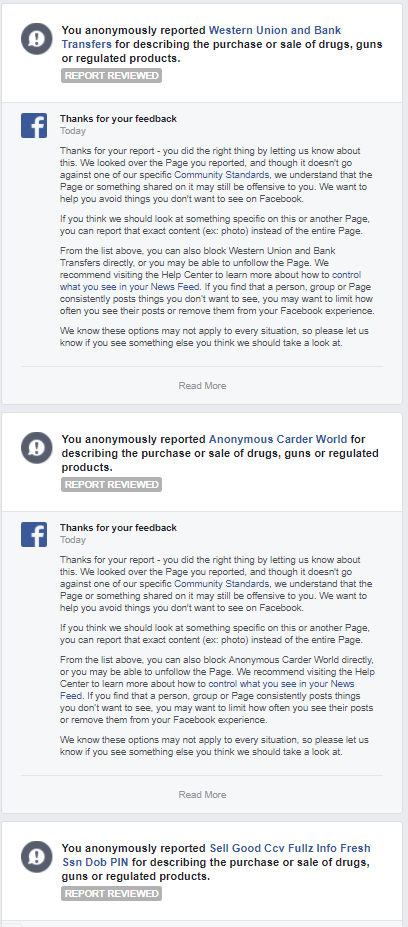

Some of the groups I reported that were removed re-established themselves within hours of Facebook’s action. Instead of contacting Facebook’s public relations arm directly, I decided to report those resurrected groups and others using Facebook’s stated process. Roughly two days later I received a series of replies saying that Facebook had reviewed my reports but that none of the groups were found to have violated its standards. Here’s a snippet from those replies:

Perhaps I should give Facebook the benefit of the doubt: Maybe my multiple reports one after the other triggered some kind of anti-abuse feature that is designed to throttle those who would seek to abuse it to get otherwise legitimate groups taken offline — much in the way that pools of automated bot accounts have been known to abuse Twitter’s reporting system to successfully sideline accounts of specific targets.

Or it could be that I simply didn’t click the proper sequence of buttons when reporting these groups. The closest matches I could find in Facebook’s abuse reporting system were “Doesn’t belong on Facebook” and “Purchase or sale of drugs, guns or regulated products.” There was (and is) no option for “selling hacked accounts, credit cards and identities,” or anything of that sort.

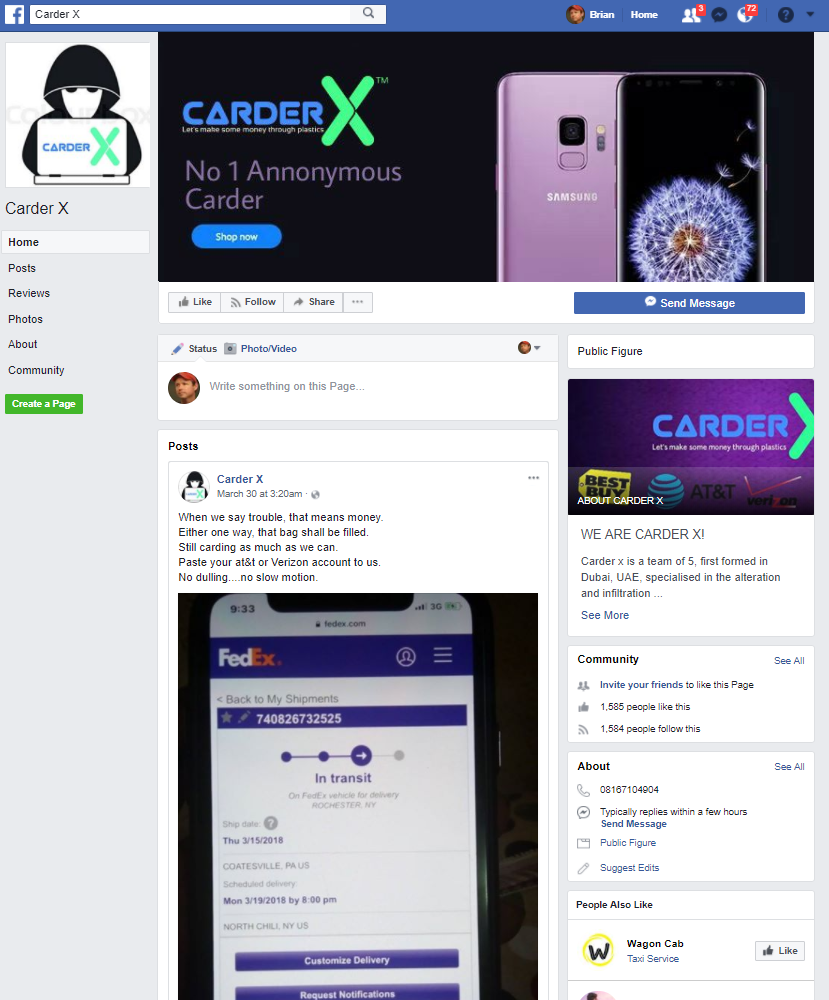

In any case, one thing seems clear: Naming and shaming these shady Facebook groups via Twitter seems to work better right now for getting them removed from Facebook than using Facebook’s own formal abuse reporting process. So that’s what I did on Thursday. Here’s an example:

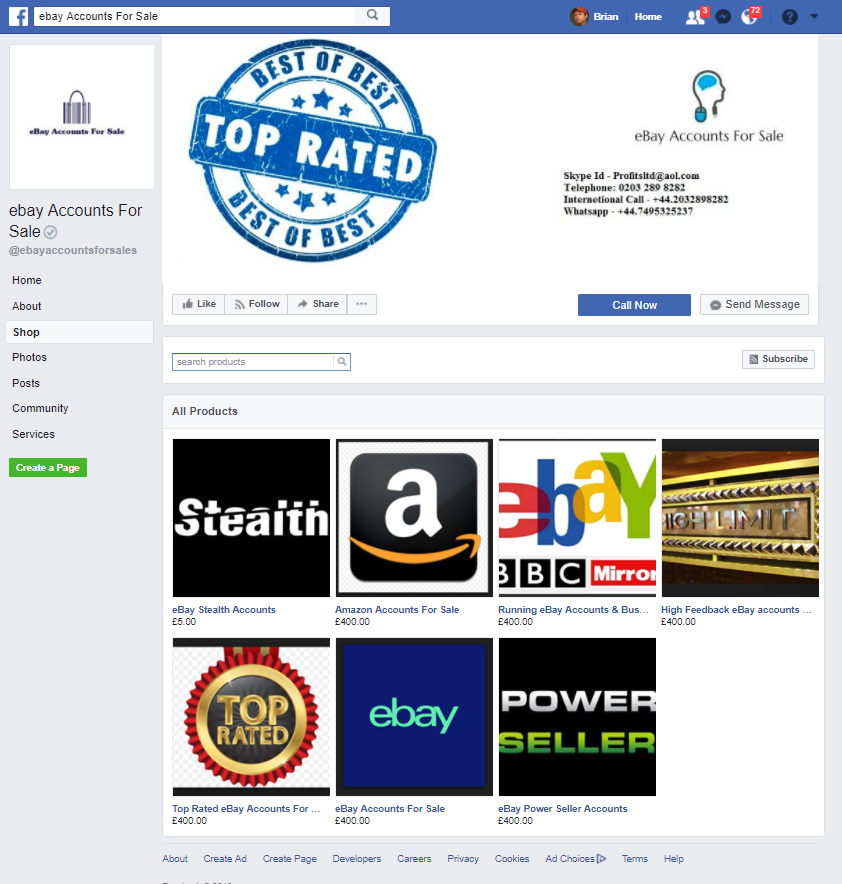

Within minutes of my tweeting about this, the group was gone. I also tweeted about “Best of the Best,” which was selling accounts from many different e-commerce vendors, including Amazon and eBay:

That group, too, was nixed shortly after my tweet. And so it went for other groups I mentioned in my tweetstorm today. But in response to that flurry of tweets about abusive groups on Facebook, I heard from dozens of other Twitter users who said they’d received the same “does not violate our community standards” reply from Facebook after reporting other groups that clearly flouted the company’s standards.

Pete Voss, Facebook’s communications manager, apologized for the oversight.

“We’re sorry about this mistake,” Voss said. “Not removing this material was an error and we removed it as soon as we investigated. Our team processes millions of reports each week, and sometimes we get things wrong. We are reviewing this case specifically, including the user’s reporting options, and we are taking steps to improve the experience, which could include broadening the scope of categories to choose from.”

Facebook CEO and founder Mark Zuckerberg testified before Congress last week in response to allegations that the company wasn’t doing enough to halt the abuse of its platform for things like fake news, hate speech and terrorist content. It emerged that Facebook already employs 15,000 human moderators to screen and remove offensive content, and that it plans to hire another 5,000 by the end of this year.

“But right now, those moderators can only react to posts Facebook users have flagged,” writes Will Knight, for Technologyreview.com.

Zuckerberg told lawmakers that Facebook hopes expected advances in artificial intelligence or “AI” technology will soon help the social network do a better job self-policing against abusive content. But for the time being, as long as Facebook mainly acts on abuse reports only when it is publicly pressured to do so by lawmakers or people with hundreds of thousands of followers, the company will continue to be dogged by a perception that doing otherwise is simply bad for its business model.

Update, 1:32 p.m. ET: Several readers drew my attention to a Huffington Post story published just three days ago, “Facebook Didn’t Seem To Care I Was Being Sexually Harassed Until I Decided To Write About It,” about a journalist whose reports of extreme personal harassment on Facebook were met with a similar response about not violating the company’s Community Standards. That is, until she told Facebook that she planned to write about it.

Tags: abuse reporting, Facebook, Pete Voss