Otter’s new app lets you record, transcribe, search and share your voice conversations

An app called Otter, launching today, wants to make searching your voice conversations as easy as searching your email and texts. The idea of a new voice assistant focused on transcribing everyday conversations, like meetings and interviews, comes from Sam Liang, the former Google architect who put the blue dot on Google Maps and later sold his next company, location platform Alohar Mobile, to Alibaba.

Along with a team that hails from Google, Facebook, Nuance, Yahoo, as well as Stanford, Duke, MIT and Cambridge, Liang’s new company AISense has been developing the technology underpinning Otter over the past two years.

Essentially a voice recorder with automatic transcription, Otter is designed to understand and capture long-form conversations between multiple people.

This is a different sort of voice technology from what has been developed so far for voice assistants like Alexa or Google Assistant.

“The existing technologies are not good enough for human-to-human conversations,” explains Liang. “Google’s voice API has been trained to optimize voice search,” he says, adding that when people talk to voice assistants, it’s typically only one person talking, and they tend to speak more slowly and clearly than usual. They also tend to ask short questions, like “what’s the weather?”, rather than carry on long conversations.

“Human meetings are much more complicated,” Liang says. “It usually involves at least two people, and the people could talk for an hour. It’s a long-form conversation.”

With Otter, the goal is to capture those conversations – meetings, interviews, lectures, etc. – and turn them into a searchable archive where everything said is immediately transcribed by AISense’s software.

Today, this is possible through Otter’s new mobile app for iOS and Android, as well as a web interface that also supports file uploads for instant transcriptions.

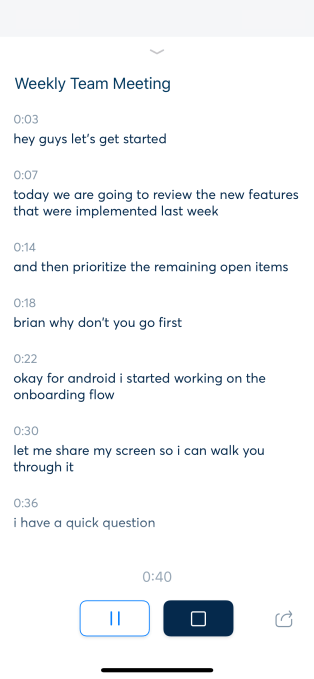

Using the app requires nothing more than pressing the “record” button. The conversation is recorded, then made available for playback with the audio synced to the transcribed text. You can also share the recording with others right from the app. The data Otter creates is stored in an encrypted format in the cloud.

The entire technology stack, including speech recognition, was built in-house. The company is not using existing speech recognition APIs because it wanted to improve accuracy and optimize for multiple speakers, says Liang.

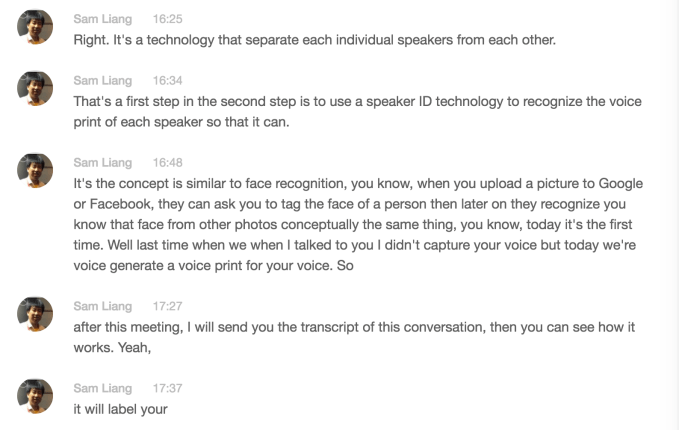

To detect when someone else starts talking, AISense uses a technique called diarization to separate the individual speakers; it then generates a voice print for each person’s voice. Broadly speaking, this is the voice equivalent of facial recognition, with the voice print used to identify that speaker going forward.
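AISense hasn’t published how its diarization works, so the Python below is only a rough illustration of one common approach, not Otter’s method. It assumes each short audio segment has already been turned into a fixed-length speaker embedding (that step is not shown), and the function names and the 0.8 similarity threshold are hypothetical. Each speaker’s “voice print” is the running average of the embeddings assigned to them; a new segment is matched to the most similar voice print, or starts a new speaker if nothing is similar enough.

```python
import math
from typing import List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def assign_speakers(
    segment_embeddings: List[List[float]], threshold: float = 0.8
) -> Tuple[List[int], List[List[float]]]:
    """Hypothetical greedy diarization: label each segment with a speaker index.

    Returns the per-segment labels and the final voice print (mean embedding)
    for each speaker discovered.
    """
    voice_prints: List[List[float]] = []  # running mean embedding per speaker
    counts: List[int] = []                # segments assigned to each speaker
    labels: List[int] = []

    for emb in segment_embeddings:
        # Find the most similar existing voice print that clears the threshold.
        best, best_sim = -1, threshold
        for i, vp in enumerate(voice_prints):
            sim = cosine(emb, vp)
            if sim >= best_sim:
                best, best_sim = i, sim

        if best == -1:
            # No match: treat this segment as a new speaker.
            voice_prints.append(list(emb))
            counts.append(1)
            best = len(voice_prints) - 1
        else:
            # Match: fold the segment into that speaker's running average.
            n = counts[best]
            voice_prints[best] = [
                (v * n + e) / (n + 1) for v, e in zip(voice_prints[best], emb)
            ]
            counts[best] = n + 1

        labels.append(best)

    return labels, voice_prints
```

With toy two-dimensional embeddings, `assign_speakers([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.95, 0.05]])` labels the segments `[0, 0, 1, 0]`: the third segment sounds different enough to become a second speaker, and the fourth is recognized as the first speaker again from their stored voice print.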

Liang says building a system like Otter’s wasn’t possible until recently.

“Four years ago, there were tremendous advances in deep learning and A.I., and suddenly, the accuracy became much higher,” he notes. “It also requires a lot of CPU power, GPU power, and a lot of storage…these became much more affordable today compared to five or ten years ago,” Liang adds.

At launch, the system is not perfect, but it shows a lot of potential.

In my limited testing, the AI was able to differentiate between speakers as promised, but it doesn’t catch every word of a conversation. It also gets words slightly wrong at times, for example dropping the “s” from “helps” and recording it as “help.”

Reading back through the transcription reminds me of reading a transcribed voicemail on an iPhone: you get the gist of what was said, but you have to play it back to truly understand the message.

That said, Otter was able to function in real-world environments. I tested it, for example, in a coffee shop with music playing, and it was still able to capture what was said to some extent.

The resulting transcript, however, breaks up the speech oddly. Sentences are cut off midway, with the rest of the sentence continuing on a new line. This makes the transcription harder to read back, because our minds are trained to see a new line as a new paragraph, or at least a pause.

Still, the system is useful for getting to the right part of a long recording, so you can then transcribe a key passage or quote more carefully.

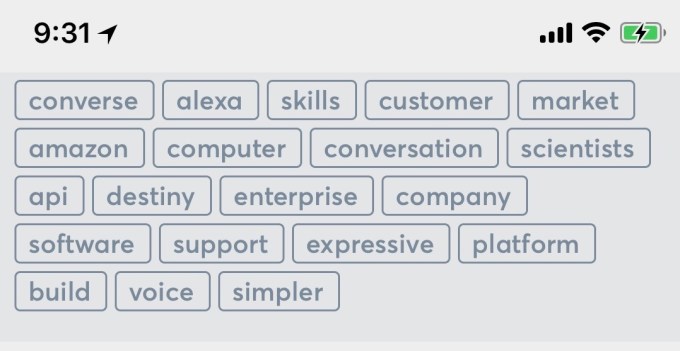

What I did like was the tag cloud at the top of the transcript, where Otter highlights words that were used frequently in the conversation. You can click on these words to jump to that part of the transcription.
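Otter hasn’t said how it picks its tag-cloud words. A simple way to get a similar effect is to count word frequencies while skipping common filler words, then look up where a chosen word appears in the timestamped transcript. The Python sketch below does exactly that; the tiny stopword list, the `(start_seconds, text)` segment format and the function names are all hypothetical, chosen for illustration.

```python
import re
from collections import Counter
from typing import List, Tuple

# Hypothetical, deliberately small stopword list; a real system would use a larger one.
STOPWORDS = {"the", "and", "for", "let's", "that", "this", "with", "are", "was"}


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def top_keywords(transcript: str, k: int = 5) -> List[str]:
    """Return the k most frequent words, ignoring stopwords and very short words."""
    counts = Counter(
        w for w in tokenize(transcript) if w not in STOPWORDS and len(w) > 2
    )
    return [word for word, _ in counts.most_common(k)]


def find_occurrences(segments: List[Tuple[float, str]], keyword: str) -> List[float]:
    """Return start times (in seconds) of transcript segments containing the keyword."""
    keyword = keyword.lower()
    return [start for start, text in segments if keyword in tokenize(text)]
```

For a three-segment transcript such as `[(0.0, "Let's review the budget for the launch."), (4.5, "The budget covers marketing."), (9.0, "Launch is next week and the budget is fixed.")]`, the top two keywords are `budget` and `launch`, and clicking `launch` would jump to the segments starting at 0.0 and 9.0 seconds.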

Liang envisions a number of potential use cases for AISense’s technology, including in enterprise, health care, education, and more.

The company has already licensed its transcription technology to web conferencing platform Zoom, but for now, the goal is to generate revenue not through licensing but through an enterprise version of Otter that will offer more controls, as well as a premium tier for the currently free consumer app.

A future release will allow for recording phone calls, but for now, the app focuses on in-person conversations.

AISense, to date, has raised $13 million in funding. Horizons Ventures – a backer of Viv, DeepMind, Siri, Slack, and others – led the $10 million Series A. Also participating were Bridgewater Associates, i-Hatch Ventures, MetaLab, Jay Markley, and Boston investors Jim Pallotta and Stu Porter.

Seed investors include Tim Draper through Draper Associates and Draper Dragon; Dave Morin through Slow Ventures; David Cheriton; SV Tech Ventures, Danhua Capital, and 500 Startups.

Otter is live today on iOS, Android, and web.

Featured Image: Tero Vesalainen/Getty Images