These psychedelic stickers blow AI minds

Machine learning systems are very capable, but they aren’t exactly smart. They lack common sense. Taking advantage of that fact, researchers have created a wonderful attack on image recognition systems that uses specially printed stickers that are so interesting to the AI that it completely fails to see anything else. Why do I get the feeling these may soon be popular accessories?

Computer vision is an incredibly complex problem, and it’s only by cognitive shortcuts that even humans can see properly — so it shouldn’t be surprising that computers need to do the same thing.

One of the shortcuts these systems take is not assigning every pixel the same importance. Say there’s a picture of a house with a bit of sky behind it and a little grass in front. A few basic rules make it clear to the computer that this is not a picture “of” the sky or the grass, despite their presence. So it considers those background and spends more cycles analyzing the shape in the middle.

A group of Google researchers wondered (PDF): what if you messed with that shortcut, and made it so the computer would ignore the house instead, and focus on something of their choice?

They accomplished it by training an adversary system to create small circles full of features that distract the target system, trying out many configurations of colors, shapes, and sizes and seeing which ones cause the image recognizer to pay attention: specific curves that the AI has learned to watch for, combinations of color that indicate something other than background, and so on.

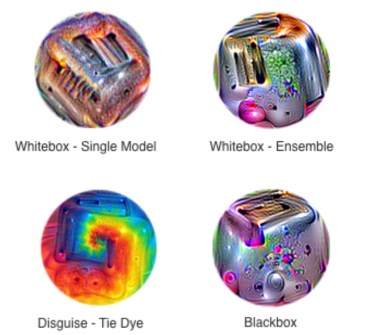

Eventually out comes a psychedelic swirl like those shown here.


Put it next to another object the system knows, like a banana, and it will immediately forget the banana and think the picture is “of” the swirl. The names in the images are different approaches to creating the sticker and merging it with existing imagery.

This is done on a system-specific, not image-specific basis — meaning the resultant scrambler patch will generally work no matter what the image recognition system is looking at.
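For the curious, here is a minimal sketch of that idea in Python, and emphatically not the researchers' code. Everything in it is an assumption made for illustration: the "images" are 1-D lists of dim pixels, the "classifier" is a single hypothetical filter (`KERNEL`) that says "toaster" when its strongest response crosses `THRESHOLD` and "banana" otherwise, and the patch is nudged step by step toward whatever excites that filter. That nudging stands in for the trial-and-error search described above. The key point it illustrates is that the patch is tuned against one system, over many random images and positions, rather than against any single picture.

```python
import random

KERNEL = [1.0, -1.0, 1.0]   # hypothetical feature the toy classifier responds to
THRESHOLD = 1.0             # strongest response above this means "toaster"
IMAGE_LEN, PATCH_LEN = 16, 3

def responses(image):
    """Slide KERNEL over the 1-D image; return one response per window."""
    return [sum(k * image[pos + j] for j, k in enumerate(KERNEL))
            for pos in range(len(image) - len(KERNEL) + 1)]

def classify(image):
    return "toaster" if max(responses(image)) > THRESHOLD else "banana"

def random_image(rng):
    # Dim pixels in [0, 0.4]: a clean image can respond with at most 0.8,
    # so it can never cross THRESHOLD on its own.
    return [rng.uniform(0.0, 0.4) for _ in range(IMAGE_LEN)]

def apply_patch(image, patch, offset):
    """Return a copy of the image with the patch pasted at the given offset."""
    patched = list(image)
    patched[offset:offset + len(patch)] = patch
    return patched

def train_patch(steps=500, lr=0.1, seed=0):
    """Tune one patch against the classifier over random images and positions."""
    rng = random.Random(seed)
    patch = [0.5] * PATCH_LEN
    for _ in range(steps):
        image = random_image(rng)
        offset = rng.randrange(IMAGE_LEN - PATCH_LEN + 1)
        scores = responses(apply_patch(image, patch, offset))
        best = scores.index(max(scores))   # window the classifier attends to
        for j in range(PATCH_LEN):
            k = offset + j - best          # KERNEL index covering this patch pixel
            if 0 <= k < len(KERNEL):
                # Nudge the pixel in the direction that raises the response,
                # keeping it a valid brightness in [0, 1].
                patch[j] = min(1.0, max(0.0, patch[j] + lr * KERNEL[k]))
    return patch

def toaster_rate(patch, trials=200, seed=1):
    """Fraction of fresh random images labeled "toaster" (patched if patch given)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        image = random_image(rng)
        if patch is not None:
            offset = rng.randrange(IMAGE_LEN - PATCH_LEN + 1)
            image = apply_patch(image, patch, offset)
        hits += classify(image) == "toaster"
    return hits / trials

patch = train_patch()
clean_rate = toaster_rate(None)      # images the patch never touched
patched_rate = toaster_rate(patch)   # new images, new positions, same patch
```

Comparing `clean_rate` with `patched_rate` shows the effect: the same small patch, dropped at random spots on images it was never tuned on, drags the toy classifier's label with it. A real attack faces a far larger model and real-world lighting, angles, and printing, so treat this only as an illustration of the principle.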

What could be done with these? Stick a few on your clothes or bag and maybe, just maybe, that image classifier at the airport or police body cam will be distracted enough that it doesn’t register your presence. Of course, you’d have to know what system was running on it, and test a few thousand variations of the stickers — but it’s a possibility.

Other attempts to trick computer vision systems have generally relied on making repeated small changes to images to see if, with a few strategically placed pixels, an AI can be tricked into thinking a picture of a turtle is in fact a gun. But these powerful, highly localized “perturbations,” as the researchers call them, constitute a different and very interesting threat.

> Our attack works in the real world, and can be disguised as an innocuous sticker. These results demonstrate an attack that could be created offline, and then broadly shared…
>
> Even if humans are able to notice these patches, they may not understand the intent of the patch and instead view it as a form of art. This work shows that focusing only on defending against small perturbations is insufficient, as large, local perturbations can also break classifiers.

The researchers presented their work at the Neural Information Processing Systems conference in Long Beach.