This facial recognition system tracks how you’re enjoying a movie

As moviemaking becomes as much a science as an art, moviemakers need ever-better ways to gauge audience reactions. Did they enjoy it? How much… exactly? At minute 42? A system from Caltech and Disney Research uses a facial expression tracking neural network to learn and predict how members of the audience react, perhaps setting the stage for a new generation of Nielsen ratings.

The research project, just presented at IEEE’s Computer Vision and Pattern Recognition conference in Hawaii, demonstrates a new method by which facial expressions in a theater can be reliably and relatively simply tracked in real time.

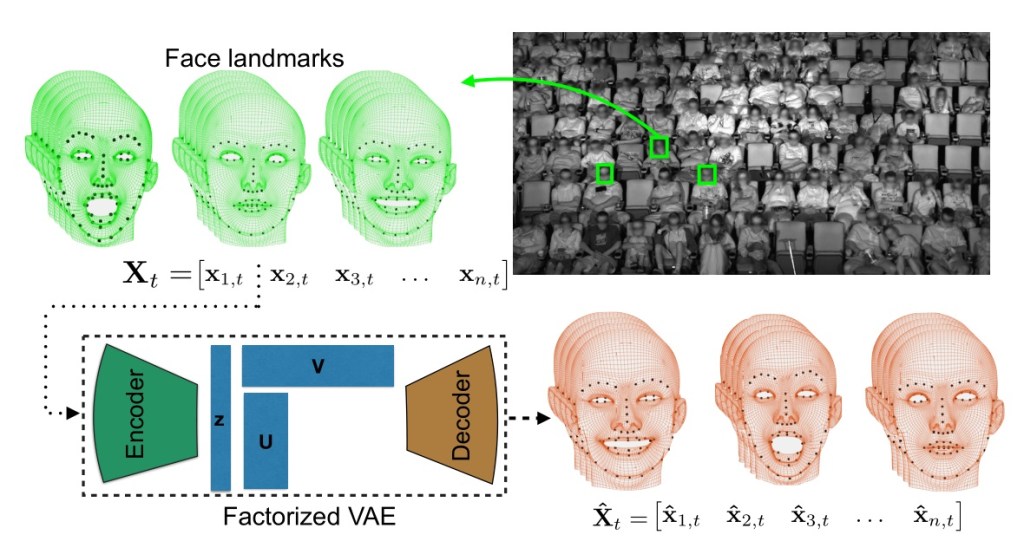

It uses what’s called a factorized variational autoencoder — the math of it I am not even going to try to explain, but it’s better than existing methods at capturing the essence of complex things like faces in motion.

The team collected a large set of face data by recording audiences of hundreds watching movies (Disney ones, naturally). An infrared hi-def camera captured everyone’s motions and faces, and the resulting data, 16 million or so data points, was fed to the neural network.

Once it had finished training, the team set the system on watching audience footage in real time and attempting to predict the expression a given face would make at various points. They found that it took about 10 minutes to warm up to the audience, so to speak, and after that reliably predicted laughs and smiles (no crying or jump scares tracked yet, it seems).
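
To make the "warm up, then predict" idea concrete, here is a deliberately tiny sketch. It is not the researchers' factorized variational autoencoder; it swaps in a plain rank-1 linear factorization, and every name in it (`time_factors`, `fit_viewer_factor`, `predict_rest`) is hypothetical. The assumption is that training has already produced one number per moment of the film describing how strongly a typical audience reacts, and that a new viewer's reactions are roughly that curve scaled by a personal factor estimated from the first few minutes.

```python
def fit_viewer_factor(observed, time_factors):
    """Least-squares estimate of one viewer's scale factor u.

    Minimizes sum((observed[t] - u * time_factors[t]) ** 2) over the
    observed warm-up window. Both inputs are hypothetical stand-ins.
    """
    window = time_factors[:len(observed)]
    numerator = sum(x * v for x, v in zip(observed, window))
    denominator = sum(v * v for v in window)
    if denominator == 0:
        raise ValueError("warm-up window carries no signal to fit against")
    return numerator / denominator


def predict_rest(observed, time_factors):
    """Predict the viewer's reactions for the moments not yet observed."""
    u = fit_viewer_factor(observed, time_factors)
    return [u * v for v in time_factors[len(observed):]]


# Assumed audience-level reaction strength at five moments in the film.
time_factors = [0.0, 1.0, 2.0, 1.0, 3.0]
# One viewer's measured smile intensity during the first three moments.
warm_up = [0.0, 0.5, 1.0]

print(predict_rest(warm_up, time_factors))
```

The longer the warm-up window, the more observations go into the estimate of the viewer's factor, which is one simple way to think about why a system like this would need some minutes of footage before its predictions settle down.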

Of course, this is just one application of a technology like this — it could be applied in other situations like monitoring crowds, or elsewhere interpreting complex visual data in real time.

“Understanding human behavior is fundamental to developing AI systems that exhibit greater behavioral and social intelligence,” said Caltech’s Yisong Yue in a news release. “For example, developing AI systems to assist in monitoring and caring for the elderly relies on being able to pick up cues from their body language. After all, people don’t always explicitly say that they are unhappy or have some problem.”

Featured Image: Caltech / Disney Research